Silicon Valley is full of the stupidest geniuses you’ll ever meet. The problem begins in the classrooms where computer science is taught.

In November 2018, over six hundred Google engineers signed a public petition to CEO Sundar Pichai demanding that the company cease work on censored search and surveillance tools for the Chinese market, code-named “Project Dragonfly.” During the ensuing public outcry, Pichai appeared before Congress, human rights groups protested in front of corporate offices in ten countries, and the company quietly put the project on hold.

This is merely one episode in what has been a long year for Big Tech, roiled by an increasingly intense series of protests and political scandals. Salesforce, Microsoft, and Amazon workers petitioned their executives to cancel lucrative contracts with US Customs and Border Protection. Facebook was found to be lobbying Congress and employing a right-wing PR firm to quell criticism from activists. Lyft and Uber drivers protested outside corporate headquarters over the companies’ rate cuts and deactivation policies.

All this has spawned a renewed interest in the ethics of technology and its role in society, with technologists, social scientists, philosophers, and policy wonks all chiming in. Of all the factions coming to the rescue, however, the most intriguing is the academic field of computer science. Recently, for instance, Stanford University’s School of Engineering launched a “Human-Centered AI Initiative” to guide the future of algorithmic ethics, and offered, for the first time, a course on data ethics for technical students. Other universities are following suit, with similar courses, institutes, and research labs springing up across the country. Academics hope that, by better teaching the next generation of engineers and entrepreneurs, universities can produce socially conscious tech professionals and thereby fix the embattled tech sector.

Yet in positioning itself as tech’s moral compass, academic computer science belies the fact that its own intellectual tools are the source of the technology industry’s dangerous power. A significant part of the problem is the kind of ideology it instills in students, researchers, and society at large. It’s not just that engineering education teaches students to think that all problems deserve technical solutions (which it certainly does); rather, the curriculum is built around an entire value system that knows only utility functions, symbolic manipulations, and objective maximization.

_____

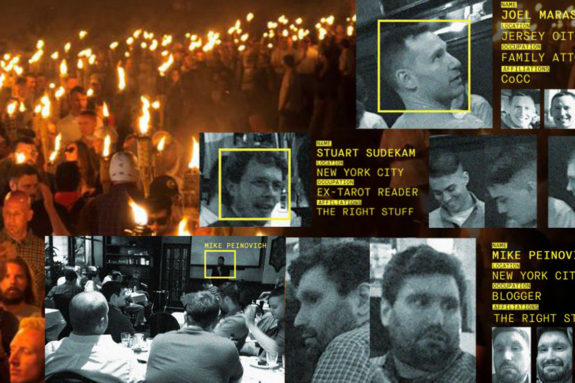

In spring 2018, Stanford offered, for the first time, a course on data ethics specifically for students with a background in machine learning and algorithms. At the time, I was a graduate student in the Computer Science department, and attended the class when I could. One particular case study was on a controversy from 2009, shortly after California’s infamous Proposition 8 was passed, which defined state-recognized marriage as being between a man and a woman. The anonymous creators of a website called Eightmaps had used the Freedom of Information Act to retrieve records of donations to organizations that supported the measure, then plotted them on a map. Visitors to the site could see the names, donation amounts, and approximate locations of individuals who had donated at least $100 to the Proposition 8 campaign. As use of the tool spread, the exposed donors received angry messages and threats of physical violence. “What could be done,” the instructor asked, “to prevent these scenarios in future elections?”

The students had many ideas. One suggested that, in the interest of protecting small donors, the $100 minimum donation threshold be increased. Another conjectured that there was some inherent trade-off between having transparent public information and preserving the privacy needed for democratic participation (including the right to donate to campaigns). Perhaps, then, there was some intermediate way to make campaign finance information more coarse-grained, protecting individual identities while still providing aggregate information about where the donations came from. Several classmates nodded in agreement.

By this time, I had plucked up the courage to raise my hand. “Isn’t it a bit insane,” I asked, “that we’re sitting here debating at what level of granularity to visualize this data? Is this a technical problem that can be optimized to find some happy balance, or is it a political question of whether elections should be run this way, with campaign contributions, in the first place?”

I looked to my peers, but there was no response. The instructor then said, “I think that’s a signal to move on to the next topic.”

It speaks to the culture of technical education that I was not the least bit surprised by this episode: neither by the solutions offered by the students, nor by the instructor’s dismissal of even a modest attempt to break out of the engineering straitjacket. The scene could have been pulled directly from Jeff Schmidt’s classic workplace polemic Disciplined Minds. “As a professional,” Schmidt observed, “the teacher is ‘objective’ when presenting the school curriculum: she doesn’t ‘take sides,’ or ‘get political.’ However, the ideology of the status quo is built into the curriculum.”

I spent four years as an undergraduate in computer science at Berkeley and two years as a master’s student at Stanford. Between the two campuses, I helped teach algorithms courses for two years, and did research in theoretical computer science for four. With the exception of a compulsory one-semester undergraduate ethics course that most attendees used as a late-afternoon nap, not once did an instructor suggest we critically examine the technical problems we were presented. So-called “soft” questions about society, ethics, politics, and humanity were silently understood to be intellectually uninteresting. They were beneath us as scientists; our job was to solve whatever problems we were given, not to question what problems we should be solving in the first place. And we learned to do this far too well.

_____

It is by now common sense that technical education gives rise to techno-solutionism: that a curriculum expounding the primacy of code and symbolic manipulations begets graduates who proceed to tackle every social problem with software and algorithms. While true, this misses what the engineering academy fundamentally teaches. The students and instructor in the ethics course were discussing a matter of politics and policy as if it were a technical problem. The issue, then, lay in their conception of the entire exercise: they reflexively committed to saving an unworkable representative democracy, and consigned themselves to inventing a clever mechanism to encourage desirable election outcomes. Techno-solutionism is the very soul of the neoliberal policy designer, fetishistically dedicated to the craft of incentive alignment and (when necessary) benevolent regulation. Such a standpoint is the effective outcome of the contemporary computational culture and its formulation as curriculum.

This culture eludes succinct description, but an initial clue is given by Stephen Boyd, a Stanford professor who teaches a popular graduate course on mathematical optimization. In the world of computing and mathematics, an “optimization problem” is any situation in which we have variable quantities we want to set, an objective function to maximize or minimize, and any constraints on the variables. For instance, a farmer may want to decide how much corn and how much wheat to grow—these two quantities are the variables; the objective function to maximize may be profit; and the farmer may be constrained by owning only so much arable land, seed corn, and so forth.
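The farmer example can be made concrete as a small linear program. The sketch below is a minimal Python illustration under invented assumptions: the profit per acre, the 100-acre land limit, the 60-acre seed-corn limit, and the labor constraint are hypothetical numbers, not drawn from any real farm. Rather than calling a real solver such as the simplex method, it leans on a textbook fact about two-variable linear programs: when the feasible region is a bounded polygon, the best value is reached at one of its corners, so checking every corner suffices.

```python
from itertools import combinations

# Hypothetical numbers for illustration only.
PROFIT = (300.0, 200.0)  # dollars per acre of (corn, wheat)

# Each constraint is (a, b, limit), meaning a*corn + b*wheat <= limit.
CONSTRAINTS = [
    (1.0, 1.0, 100.0),   # arable land: at most 100 acres in total
    (1.0, 0.0, 60.0),    # seed corn: enough for at most 60 acres of corn
    (2.0, 1.0, 150.0),   # labor: corn needs 2 hours/acre, wheat 1; 150 hours available
    (-1.0, 0.0, 0.0),    # corn >= 0
    (0.0, -1.0, 0.0),    # wheat >= 0
]


def feasible(corn, wheat, eps=1e-9):
    """True if the planting plan satisfies every constraint (with float tolerance)."""
    return all(a * corn + b * wheat <= limit + eps for a, b, limit in CONSTRAINTS)


def solve_two_variable_lp():
    """Maximize profit by checking every corner of the feasible region.

    Each corner is where two constraint boundary lines cross, so we solve
    every pair of boundary equations and keep the best feasible point.
    """
    best = None
    for (a1, b1, c1), (a2, b2, c2) in combinations(CONSTRAINTS, 2):
        det = a1 * b2 - a2 * b1
        if abs(det) < 1e-12:
            continue  # parallel boundaries never meet in a single point
        # Cramer's rule for a1*x + b1*y = c1 and a2*x + b2*y = c2.
        corn = (c1 * b2 - c2 * b1) / det
        wheat = (a1 * c2 - a2 * c1) / det
        if feasible(corn, wheat):
            value = PROFIT[0] * corn + PROFIT[1] * wheat
            if best is None or value > best[0]:
                best = (value, corn, wheat)
    return best


profit, corn, wheat = solve_two_variable_lp()
print(f"corn={corn:.0f} acres, wheat={wheat:.0f} acres, profit=${profit:,.0f}")
```

With these made-up numbers the script prints `corn=50 acres, wheat=50 acres, profit=$25,000`: the land and labor limits bind, while the seed-corn limit goes partly unused. Corner enumeration only works for toy problems; planning models with thousands of variables are handed to dedicated LP solvers.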

Boyd teaches this course once a year, and typically in the first lecture, he declares: “Everything is an optimization problem.” The claim reeks of techno-utilitarian naïveté, with its suggestion that every object can be modeled as a bunch of numbers, every human desire expressed in a utility function, every problem resolvable using the more or less crude calculation devices in our pockets. Yet for the four hundred students enrolled in the winter 2019 offering of this course (mostly PhD and master’s students in computer science, electrical engineering, and applied mathematics), these assumptions underpin everything they have learned for the better part of a decade. For them, the statement is not just true, it’s banal.

Regardless of whether Boyd’s assertion is valid in some pseudo-mathematical sense, it serves as a historical marker for the state of the computational sciences. The study of computation has always been intellectually varied: within the field, there are hardware architects designing faster processors and larger storage devices; cryptographers devising protocols for secure messaging; scientists using computers to simulate physical processes; and mathematicians exploring which problems can and cannot be solved by computers. The subfield of optimization has existed since World War II, but until recently, it was just one among many. Now it’s a topic that many technical students feel compelled to spend at least one or two semesters studying.

From the standpoint of political-economic history, the ascendant popularity of optimization algorithms is a curious development. The field came to maturity in the middle of the twentieth century with the development of linear programming, a general optimization framework useful for modeling problems ranging from the allocation of goods to routing vehicles in networks. In the Soviet Union, a cohort of economists and mathematicians—including the inventor of linear programming, Leonid Kantorovich—adopted the technique as a tool for central planning beginning in the 1960s. In the West, major industrial firms began relying on optimization software to plan factory layouts and coordinate shipping and transport. Optimization also found its way into military applications; in one famous Cold War example, the US Air Force sought a strategy for bombing Soviet railways so as to isolate the USSR from the rest of Eastern Europe. A specialized case of linear programming yielded the optimal solution.

Notably, on both sides of the Iron Curtain, optimization was deployed in decisively non-market settings. Such was the first half-century of the subfield’s existence, in which optimization was identified with planning. The engineer would first decide what problem needed to be solved, and would then formulate an optimization program that modeled the problem exactly and solved it once and for all. In these contexts, optimization theory was among the least market-oriented of liberal sciences. But over the past couple of decades, optimization has found itself refashioned by a new set of industrial applications, as computers transcend their traditional role as mere number-crunchers or tools for speeding up mundane business processes. Today, the applications with which industry is so enamored are qualitatively different, relying heavily on artificial intelligence and its prominent subfield, machine learning. Businesses, militaries, and surveillance states demand algorithms that are not just fast, efficient, or secure, but also smart: Suggest an ad to this user so as to maximize the probability they’ll click on it. Identify, with the highest possible accuracy, which of these images contain humans. Predict whether this applicant will pay back their loan.

Machine learning is on the websites you visit, the device in your pocket, and next year’s smart kitchen appliances. It’s being used to judge your creditworthiness, set your bail, and decide whether to stop and frisk you on the street. Written informally in English, these tasks already sound like optimization problems; mathematically, they can indeed be expressed using variables, constraints, and objective functions, then solved using optimization software.
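To make that claim concrete for the loan example, here is one standard way to write “predict whether this applicant will pay back their loan” as an optimization problem. Logistic regression is chosen here as an illustrative assumption; the essay does not say which model any lender uses. Each of $n$ past applicants $i$ has a feature vector $a_i \in \mathbb{R}^d$ and a label $y_i \in \{0, 1\}$ recording whether they repaid; the variables are the weights $\theta$; the objective is the average log-loss; and in this basic form there are no constraints.

$$
\min_{\theta \in \mathbb{R}^d} \; \frac{1}{n} \sum_{i=1}^{n} \Big[ -y_i \log \sigma(\theta^\top a_i) - (1 - y_i) \log\big(1 - \sigma(\theta^\top a_i)\big) \Big],
\qquad \sigma(z) = \frac{1}{1 + e^{-z}}
$$

A new applicant with features $a$ is then scored by $\sigma(\theta^\top a)$, the model’s estimated probability of repayment.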

Back on campus, the AI and machine learning takeover of industry is fully reflected in course enrollment numbers. At Huang Engineering Center, just a few hundred feet from where Boyd lectures on optimization, Andrew Ng teaches CS 230: Deep Learning, a course that enrolled eight hundred students in the 2017–18 year, and yet is the smaller of Ng’s two courses. Ng is something of a celebrity in machine learning, a Twitter hype man for AI. In a scene that has become all too typical, he walks onstage to deliver the first lecture of the course, then disappears for several weeks to tend to his self-driving car startup. For most of the quarter, the lectures are doled out by a graduate student, who does a commendable job given the circumstances.

The course is about “deep neural networks,” a popular class of machine-learning models powered at their core by various optimization techniques. However, the optimization paradigms used here are not of the planning variety. The algorithm designer picks a heuristic objective function that incentivizes a good model, but there are no constraints, and there are no guarantees of obtaining a good solution. Once trained, the model is run on some sample data; if the results are poor, the designer tweaks one of dozens of parameters or refines the objective with an additional term, then tries again. Eventually, we are promised, we will arrive at a trained neural network that accurately executes its desired task.
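The loop described above can be sketched in a few lines. To stay short and dependency-free, this hypothetical Python example trains a one-feature linear model rather than a deep neural network, on made-up data; the learning rates, penalty weights, and step count are arbitrary choices. What it shares with neural-net training is the shape of the process: an unconstrained objective minimized by gradient descent, an extra term bolted on to steer the result, and an outer loop of retraining under different settings until something scores well on held-out data.

```python
# Hypothetical toy data: y is roughly 3*x plus noise (made-up values for illustration).
TRAIN = [(0.0, 0.1), (1.0, 2.9), (2.0, 6.2), (3.0, 8.8), (4.0, 12.1)]
VALID = [(1.5, 4.4), (2.5, 7.6), (3.5, 10.4)]


def mean_squared_error(w, b, data):
    """Average squared gap between the prediction w*x + b and the target y."""
    return sum((w * x + b - y) ** 2 for x, y in data) / len(data)


def train(learning_rate, penalty, steps=500):
    """Unconstrained gradient descent on: mean squared error + penalty * (w^2 + b^2).

    The penalty stands in for the add-on terms a designer bolts onto the
    objective when results look wrong; nothing guarantees a given choice is good.
    """
    w, b = 0.0, 0.0
    n = len(TRAIN)
    for _ in range(steps):
        residuals = [(w * x + b - y, x) for x, y in TRAIN]
        grad_w = sum(2 * r * x for r, x in residuals) / n + 2 * penalty * w
        grad_b = sum(2 * r for r, _ in residuals) / n + 2 * penalty * b
        w -= learning_rate * grad_w
        b -= learning_rate * grad_b
    return w, b


# The "tweak and try again" loop: retrain under each setting and keep
# whichever scores best on held-out data.
results = []
for learning_rate in (0.001, 0.01, 0.05):
    for penalty in (0.0, 0.1, 1.0):
        w, b = train(learning_rate, penalty)
        results.append((mean_squared_error(w, b, VALID), learning_rate, penalty, w, b))

best_error, best_lr, best_penalty, best_w, best_b = min(results)
print(f"best: lr={best_lr}, penalty={best_penalty}, w={best_w:.2f}, b={best_b:.2f}, "
      f"validation MSE={best_error:.3f}")
```

Nothing in the code says in advance which setting will win; with a slow learning rate, 500 steps may not be enough to converge, and the only way to find out is to run it. In a real deep network the knobs are far more numerous and the objective is non-convex, so even the inner gradient descent carries no guarantee of reaching a good solution.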

The whole process of training a neural net is so ad hoc, so embarrassingly unsystematic, that students often find themselves perplexed as to why these techniques should work at all. A friend who also took the course once asked a teaching assistant why deep learning fares well in practice, to which the teaching assistant responded: “Nobody knows. It just does.”

For a field that touches so much of our daily lives and makes such sweeping claims about its ability to solve our problems, the study of machine learning offers a stunning revelation that computer science in the twenty-first century is, in reality, wielding powers it barely understands. The only other field that seems to simultaneously know so much and so little is economics.

In fact, isn’t this free-wheeling, heuristic form of optimization reminiscent of how economics is understood today? Rather than optimization as planning, we seek to unleash the power of the algorithm (the free market). When the outcomes are not as desired, or the algorithm optimizes its objective (profit) much too zealously for our liking, we meekly correct for its excesses in retrospect with all manner of secondary terms and parameter tuning (taxes, tolls, subsidies). All the while, the algorithm’s inner workings are opaque and its computational power is described in terms of magic, evidently understandable only by a gifted and overeducated class of technocrats.

Upon entering the “real world,” the perspective acquired through this training melds seamlessly into the prevailing economic ideology. After all, what is neoliberal capitalism but a system organized according to a particularly narrow kind of optimization framework? In school, we were told that any problem could be resolved by turning the algorithmic knobs in just the right way. After graduating, this translates into a belief that, to the extent that society has flaws, they can be remedied without systemic change: if capital accumulation is the one true objective, and the market is an infinitely malleable playground, then all that’s needed is to give individual agents the proper incentives. To reduce plastic use, add a surcharge on grocery bags. To solve the housing crisis, relax constraints on luxury apartment developers. To control pollution, put a market price on it using cap and trade.

At a high level, the computationalist interpretation of the modern economy goes something like this: an economy can be thought of as one gigantic, distributed optimization problem. In its most basic form, we want to decide what to produce, how much to pay workers, and which goods should be allocated to whom—these are the variables in the optimization program. The constraints consist of any natural limits on resources, labor, and logistics. In primitive laissez-faire capitalism, the objective to be maximized is, of course, the total profit or product.

The original sin of the capitalist program, then, is that it optimizes not some measure of social welfare or human satisfaction, but a quantity that could only ever be a distant proxy for those goals. To ameliorate the extensive damage caused by this poor formulation, today’s liberal democracies seek to design a more nuanced program. Profit still constitutes the first term of the objective, but is now accompanied by a daunting array of endlessly tweakable secondary terms: progressive taxation on incomes to slow wealth accumulation, Pigovian taxes and subsidies to guide consumer behavior, and financialized emissions markets to put a dent in the planet’s rapid disintegration. Whenever market-based carrots and sticks fall short, governments attempt to impose regulations, introducing additional constraints. These policy fixes follow precisely the same logic as the in-class exercises in mathematical gimmicks.
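One hedged way to write down the program sketched in the two paragraphs above, in illustrative notation that is not the author’s: $x$ collects the decision variables (what to produce, how much to pay workers, who receives which goods); $\Pi(x)$ is total profit; each $T_k(x)$ is a secondary term, such as taxable income or tons of emissions, weighted by a tunable coefficient $\lambda_k$ (negative for a subsidy); the $g_j$ encode natural limits on resources, labor, and logistics; and the $h_\ell$ encode regulations.

$$
\begin{aligned}
\max_{x} \quad & \Pi(x) - \sum_{k=1}^{K} \lambda_k \, T_k(x) \\
\text{subject to} \quad & g_j(x) \le 0, \quad j = 1, \dots, m \quad \text{(resources, labor, logistics)} \\
& h_\ell(x) \le 0, \quad \ell = 1, \dots, r \quad \text{(regulations)}
\end{aligned}
$$

Setting $K = 0$ and $r = 0$ recovers the primitive laissez-faire program, which maximizes profit subject only to natural limits; each policy fix described above amounts to adding a weighted term to the objective or a constraint to the feasible set.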

_____

Today, there is much debate about the societal role that algorithms play. Not too long ago, it was assumed by most that algorithms were, if not politically neutral, at least not a fundamental hazard to humans. To be sure, the transformation wrought by intelligent systems was regarded as highly destructive and disruptive; but, like the industrial revolution that came long before it, this was initially regarded as an impersonal fact of economic history, not something that actively discriminated against particular populations or served as a ruling-class project. In 2013, if someone brought up the “bias” of your machine learning model, it was safe to assume they were referring to its so-called “generalization error”—a purely statistical matter devoid of moral judgment.

Then, just four or five years ago, the floodgates opened. Mainstream newspapers published exposés on algorithmic black boxes kicking people off social services; researchers churned out papers detailing how predictive accuracy came at the expense of fairness. The traditional view, that algorithms make neutral, objective decisions, has now been exposed as fraudulent; in its place, a new tech-humanist consensus has emerged, which acknowledges that in practice, algorithms encode the biases and peculiarities of their designers and, in the case of machine learning, the data used to train them. In other words, “garbage in, garbage out.”

Yet in some sense, this view is in fact the ultimate apologetics for computational tyranny disguised as woke criticism. It implicitly maintains that algorithms, aside from the faults of their human programmers and impurities in their training data, are in principle value-free. In this reckoning, computer science itself is non-ideological: it merely seeks to improve and automate that which already exists.

But isn’t this precisely the regime of ideology today? The late Mark Fisher described the phenomenon of “capitalist realism” as the pervasive feeling that capitalism is the only viable economic and social system, and that alternatives are either unimaginable or inevitably result in reeducation camps and gulags. Once we accept that political history has effectively ended, the only remaining task is to fine-tune the system we have to the best of our ability.

For decades, the contributions of academia to the capitalist-realist regime came mostly by way of economics, led in the United States by its ultra-liberal Chicago School proponents. But for the ruling classes, this carried a major limitation: economics is, despite efforts to obfuscate this fact, a political arena by definition, open to debate and struggle. This is where computer science has the upper hand: it teaches the axioms and methods of advanced capitalism, stripped of the pesky political questions that might arise in economics or other social sciences. In its current form, computer science is a successful indoctrination vehicle for industry and state precisely because it appears as its opposite: a value-free field that embodies both rigorous mathematics and pragmatic engineering. It is the ideal purveyor of capitalist realism for a skeptical age; a right-wing science that thrives in our post-ideological era.

How, then, should today’s revolutionaries relate to the computer and its sciences? To begin to craft an answer, we should first examine the state of affairs.

In industry, the prevailing debate at the moment features two camps: on the one hand, the traditional elite circle of corporate titans, executives, investors, and their devoted followers; on the other, the tech humanists—a loose alliance of do-gooders in government, media, nonprofits, academia, and certain repentant figures within the industry itself—who believe Silicon Valley can be politely tamed through enlightened policy, reformed engineering practices, and ethics training. Both parties share the same vision: that of a society dominated by a technical aristocracy that exploits and surveils the rest of us. One of them comes with a smiley face. Needless to say, to leave questions of the development of and control over technology to either of these groups would mean succumbing to an unthinkably oppressive future.

In higher education and research, the situation is similar, if further removed from the harsh realities of technocapitalism. Computer science in the academy is a minefield of contradictions: a Stanford undergraduate may attend class and learn how to extract information from users during the day, then later attend an evening meeting of the student organization CS+Social Good, where they will build a website for a local nonprofit. Meanwhile, a researcher who attended last year’s Conference on Economics and Computation would have sat through a talk on maximizing ad revenue, then perhaps participated the next morning in a new workshop on “mechanism design for social good.”

It is in this climate that we, too, must construct our vision for computer science and its applications. We might as well start from scratch: in a recent article for Tribune, Wendy Liu calls to “abolish Silicon Valley.” By this she means not the naive rejection of high technology, but the transformation of the industry into one funded, owned, and controlled by workers and the broader society—a people’s technology sector.

Silicon Valley, however, does not exist in an intellectual vacuum; it depends on a certain type of computer science discipline. Therefore, a people’s remake of the Valley will require a people’s computer science. Can we envision this? Today, computer science departments don’t just generate capitalist realism—they are themselves ruled by it. Only those research topics that carry implications for profit extraction or military applications are deemed worthy of investigation. There is no escaping the reach of this intellectual-cultural regime; even the most aloof theoreticians feel the need to justify their work by lining their paper introductions and grant proposals with spurious connections to the latest industry fads. Those who are more idealistic or indignant (or tenured) insist that the academy carve out space for “useless” research as well. However, this dichotomy between “industry applications” and “pure research” ignores the material reality that research funding comes largely from corporate behemoths and defense agencies, and that contemporary computer science is a political enterprise regardless of its wishful apolitical intentions.

In place of this suffocating ideological fog, what we must construct is a notion of communist realism in science: that only projects in direct or indirect service to people and planet will have any hope of being funded, of receiving the esteem of the research community, or even of being considered intellectually interesting. What would a communist computer science look like? Can we imagine researchers devising algorithms for participatory economic planning? Machine learning for estimating socially necessary labor time? Decentralized protocols for coordinating supply chains between communes?

Allin Cottrell and Paul Cockshott, two of the few contemporary academics who tackle problems of computational economics in non-market settings, had this to say in a 1993 paper:

> Our investigations enable us to identify one component of the problem (with economic planning): the material conditions (computational technology) for effective socialist planning of a complex peacetime economy were not realized before, say, the mid-1980s. If we are right, the most notorious features of the Soviet economy (chronically incoherent plans, recurrent shortages and surpluses, lack of responsiveness to consumer demand), while in part the result of misguided policies, were to some degree inevitable consequences of the attempt to operate a system of central planning before its time. The irony is obvious: socialism was being rejected at the very moment when it was becoming a real possibility.

Politically, much has changed since these words were written. The takeaway for contemporary readers is not necessarily that we should devote ourselves to central planning once more; rather, it’s that our moment carries a unique mixture of ideological impasse and emancipatory potential, ironically both driven in large part by technological development. The cold science of computation seems to declare that social progress is over—there can only be technological progress. Yet if we manage to wrest control of technology from Silicon Valley and the Ivory Tower, the possibilities for postcapitalist society are seemingly endless. The twenty-first-century tech workers’ movement, a hopeful vehicle for delivering us towards such prospects, is nascent—but it is increasingly a force to be reckoned with, and, at the risk of getting carried away, we should start imagining the future we wish to inhabit. It’s time we began conceptualizing, and perhaps prototyping, computation and information in a workers’ world. It’s time to start conceiving of a new left-wing science.